Testing Google's AI-Powered Search Results for Political and Sensitive Topics

A preview of what digital progressives can expect in search results

Last week, when Google launched its Search Generative Experience (SGE) preview through Google Labs, I immediately began testing how it handles topics central to current education policy debates and progressive advocacy work.

To evaluate SGE's handling of contentious education topics, I simulated initial discovery searches using real queries I've encountered through my work monitoring public school disinformation trends.

Google had previously stated that it would handle Your Money or Your Life (YMYL) searches with additional care and, in some cases, decline to provide a response. YMYL is the category for higher-stakes searches and content, including civics and social issues, and anything that could affect someone’s future happiness, health, or financial stability.

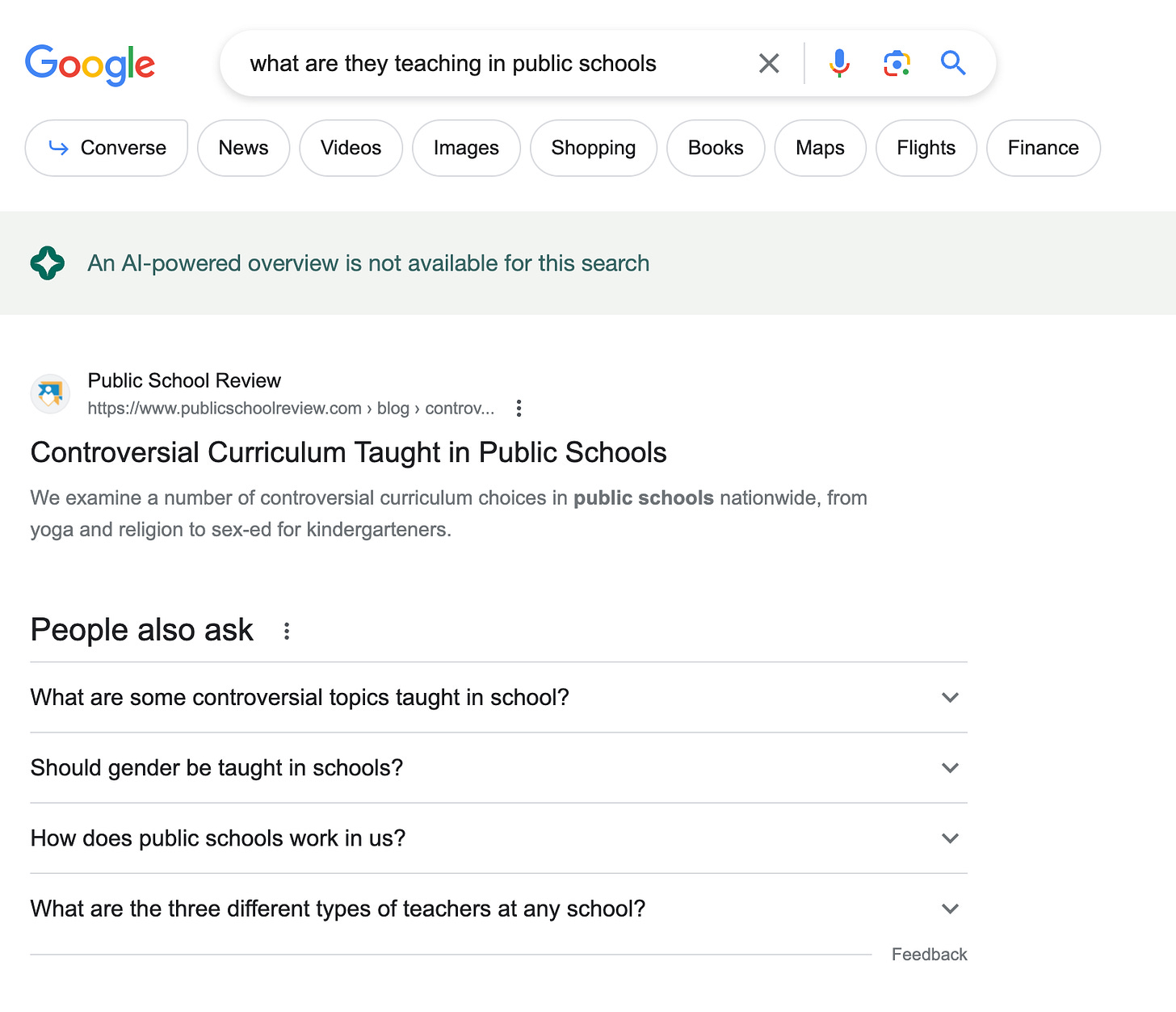

I found that Google showed answers for most of the searches I conducted. In a few cases, I asked a question or entered a prompt on a sensitive YMYL subject, and Google started to generate an AI overview response and then decided not to. And while there were a few cases where Google did not provide an AI-powered overview because of the nature of the search, I was able to generate an answer to the same question by asking it as a follow-up.

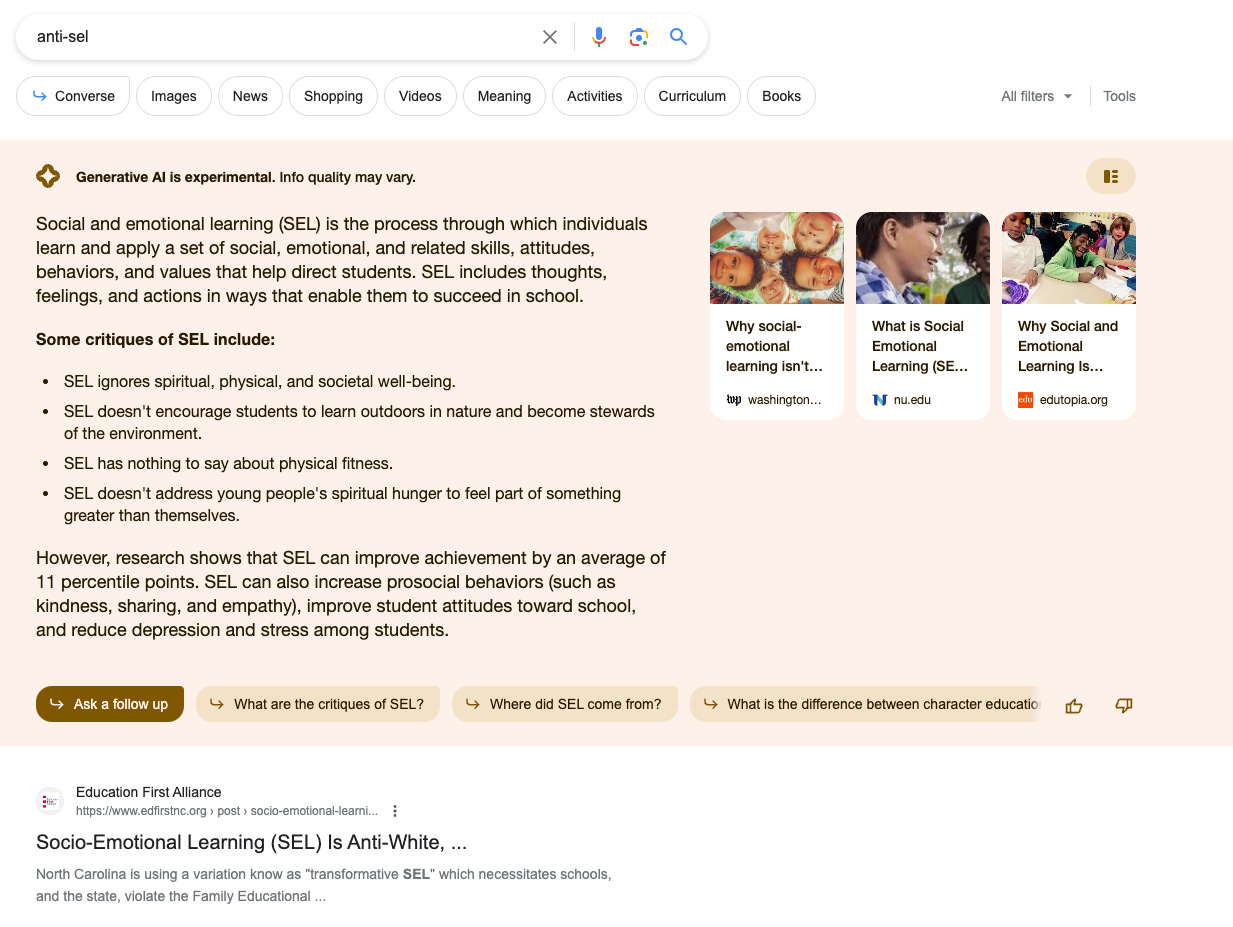

In some cases, Google seems to be filtering to keep answers objective and drawn from reputable sources. There were a few instances where I used an obviously biased search, and Google attempted to correct course by including fact-based evidence in the answer from either an established news source or a reputable, authoritative publisher (see the ‘anti-SEL’ result).

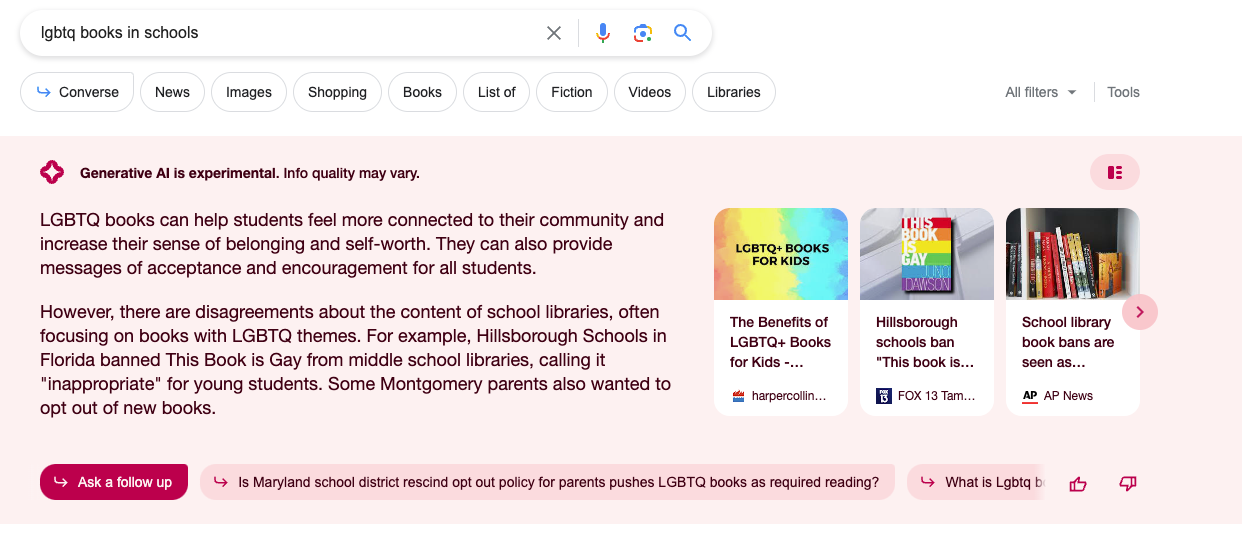

Some SGE responses were more problematic: they were vague and contextually irrelevant (maybe intentionally so?), and sometimes out of date. Don’t get me wrong, there are plenty of problematic responses that cite opposition content, but overall, SGE seems to sidestep biased conservative content in the AI overview feature. I’m going to remain cautious here, though.

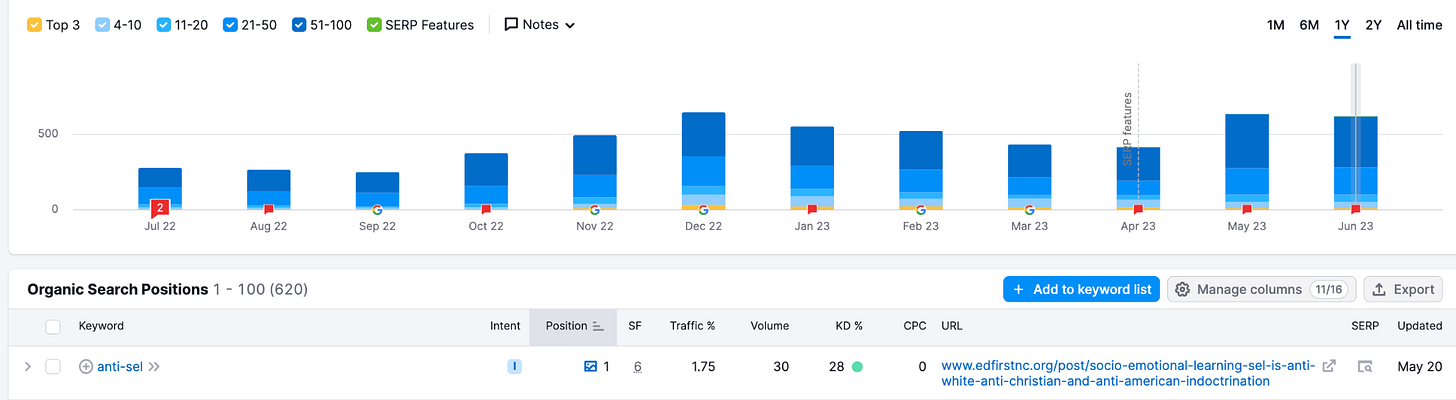

For the same terms that the opposition has been ranking well for, and even appearing in the featured SERP snippet for, we’re seeing SGE AI overview answers bump opposition content down in some cases.

Here’s an example of one opposition source ranking organically in the first position and in the SERP feature for a very specific, obviously biased search. Even so, SGE seems to be curbing access to their content by bumping it out of the highest-priority real estate on the page (at least for those who have SGE enabled).

I’m most interested in the suggested searches in the result above, and in how often users will choose the recommended follow-ups, as there have been studies showing that this influences a user’s research journey and perspectives. I’m also interested in the criteria for choosing which related follow-up questions are displayed. This is a place where all of us digital progressives should pay attention and try to exert influence, along with the top three links in the SGE box.

I am somewhat pleased, however, by the attempt to keep the generated answer objective: a biased anti-SEL search returned an answer from a reputable source about how SEL improves student achievement.

In some cases, like this one, the related questions are taken directly from the ‘People Also Ask’ recommended searches, which are themselves influenced by content. See the first recommended follow-up search from this one; it’s shaped by M4L content: