Algorithmic Revival: How a 1990 Article on a Government Landing Page Was Weaponized for the 'Radical Left' Narrative

How outdated government content gets weaponized through backlink manipulation and AI training data

UPDATE [September 22, 2025]:

The White House designated Antifa as a "domestic terrorist organization," citing the need to combat "Radical Left violence." This represents the operational deployment of the information infrastructure documented below.

Within hours of Charlie Kirk's shooting at Utah Valley University on September 10, a familiar script began to play out. Conservative leaders rushed to blame the "radical left" for creating a climate of violence. Trump called for beating "the hell out of radical left lunatics." Senator Lindsey Graham spoke of leftist "dehumanization" of MAGA supporters. The usual suspects were saying the usual things.

But something else was happening beneath the surface. When Americans began searching for information about political violence and terrorism, they were being systematically steered toward a very specific narrative, one that treated "radical left" and "radical right" violence as equivalent threats, despite decades of data showing white supremacist violence dominating domestic terrorism statistics.

The evidence trail leads to a 35-year-old government document that had been digitally resurrected through a sophisticated manipulation campaign.

Zombie Page

Buried in the Office of Justice Programs archives sits an unremarkable landing page from 2021. It digitizes a 1990 article from Police Chief magazine: "Radical Right vs. Radical Left: Terrorist Theory and Threat."

The article itself had gathered dust for three decades. Then something interesting happened: starting in 2021, the page began accumulating backlinks like a magnet, a pattern that accelerated dramatically in 2024 and continued through 2025.

The scale is significant: 3,373 documented backlinks across 14 domains, the bulk of them generated by a single domain in May 2024 alone. This coordinated campaign transformed an obscure archive page into one of the most linked resources on the entire OJP website.

This wasn't organic interest in historical counterterrorism research. This was algorithmic manipulation, a deliberate campaign to resurrect outdated government content and serve a convenient framing exactly when it would be most politically useful.

Amplification Machine

Temporal analysis revealed the following timeline pattern:

Phase 1 (2021-2022): Initial accumulation coinciding with the page's creation

Phase 2 (May 2024): Massive injection of over 3,300 links in a single week from one U.S.-based aggregator domain

Phase 3 (2025): Sustained trickle with targeted injections averaging 6-10 links monthly
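Temporal analysis of this kind typically starts by grouping links by the date they were first seen. A minimal sketch, assuming you have exported a first-seen date for each backlink from a backlink tool: it buckets links by month and flags months far above the typical month. The function names, the 10x-median threshold, and the data are all hypothetical and illustrative, not the dataset behind this article.

```python
from collections import Counter
from datetime import date
from statistics import median

def monthly_counts(first_seen):
    """Count backlinks by the (year, month) in which each was first seen."""
    return Counter((d.year, d.month) for d in first_seen)

def flag_spikes(counts, factor=10):
    """Return months whose count exceeds `factor` times the median active month.

    Hypothetical threshold. The median ignores months with zero links,
    since the Counter only stores months that actually appear in the data.
    """
    baseline = median(counts.values())
    return sorted(month for month, n in counts.items() if n > factor * baseline)

# Made-up data loosely shaped like the three phases above (illustration only).
first_seen = (
    [date(2021, 6, 1)] * 4
    + [date(2022, 3, 1)] * 3
    + [date(2024, 5, 10)] * 300
    + [date(2025, m, 1) for m in range(1, 7) for _ in range(8)]
)
counts = monthly_counts(first_seen)
print(flag_spikes(counts))  # [(2024, 5)]
```

Using the median rather than the mean keeps one huge injection month from inflating its own baseline.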

A temporal link strategy was deployed, exploiting a core vulnerability in both search engine and AI content ranking systems. By flooding old pages with fresh backlinks during moments of peak information demand, manipulators can resurface and legitimize outdated content. Algorithms generally prioritize pages receiving surges of new backlinks, interpreting these spikes as evidence of current relevance.

Fresh backlinks signal to algorithms that content is currently important, regardless of when it was originally published. A 1990 article receiving hundreds of links in 2024 and 2025 gets interpreted as newly relevant information, not as a historical archive. The effect: an outdated government analysis gets algorithmically promoted as contemporary guidance exactly when Americans are seeking current information about political violence.
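To make the freshness effect concrete, here is a toy model, not the ranking formula of Google or any other real system, in which each backlink's weight decays with age and halves every 90 days. The half-life and the function name are arbitrary assumptions. Under that assumption, a small batch of new links outweighs a much larger pile of old ones.

```python
from datetime import date

def freshness_score(link_dates, today, half_life_days=90):
    """Toy score (hypothetical): each backlink's weight halves every `half_life_days` days."""
    return sum(0.5 ** ((today - d).days / half_life_days) for d in link_dates)

today = date(2024, 6, 1)
old_links = [date(2021, 1, 15)] * 100   # many links, all years old
fresh_links = [date(2024, 5, 1)] * 20   # few links, all from last month

print(freshness_score(fresh_links, today) > freshness_score(old_links, today))  # True
```

Real ranking systems weigh many more signals; the sketch only shows why a recency-weighted count rewards sudden bursts of new links.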

The anchor text and content analysis reveal more about the strategy and its sophistication. Across more than 3,300 links and 14 domains, nearly all used raw URLs rather than descriptive text, a pattern characteristic of automation. But this wasn't just about scale. This technique maximizes the frequency of the URL string itself in web corpora and AI training datasets, increasing the probability that search engines and AI systems will surface this content.
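Measuring the raw-URL pattern is straightforward once anchor texts are collected. A minimal sketch, assuming "raw URL" means the visible link text begins with http://, https://, or www. (a simplification: bare domains such as example.gov/page would be missed). The function names and sample anchors are hypothetical.

```python
import re

# Assumption: anchor text counts as a "raw URL" if it starts with
# http://, https://, or www. and contains no whitespace.
RAW_URL = re.compile(r"^(?:https?://|www\.)\S+$", re.IGNORECASE)

def is_raw_url(anchor_text):
    return bool(RAW_URL.match(anchor_text.strip()))

def raw_url_share(anchor_texts):
    """Fraction of anchors whose visible text is a bare URL (0.0 for no anchors)."""
    if not anchor_texts:
        return 0.0
    return sum(is_raw_url(a) for a in anchor_texts) / len(anchor_texts)

anchors = [
    "https://example.gov/archive/page",   # hypothetical URLs
    "www.example.gov/archive/page",
    "HTTPS://EXAMPLE.GOV/ARCHIVE/PAGE",
    "Radical Right vs. Radical Left",      # descriptive anchor text
]
print(raw_url_share(anchors))  # 0.75
```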

The geographic analysis shows this was domestic manipulation, not foreign interference. The vast majority of linking domains were U.S.-based, suggesting a homegrown strategy of influence.

Archival Weaponization

What we're witnessing is an evolution of archival weaponization: strategically resurrecting outdated official content to legitimize contemporary political narratives, now adapted for algorithmic systems and AI training data.

The approach exploits multiple vulnerabilities. Search algorithms treat government sources as more credible. Backlink manipulation makes old content appear newly relevant. Official archives provide seemingly "neutral" citations for partisan arguments. Manipulated content becomes embedded in AI knowledge bases.

The genius is that it requires no new content creation. Instead, it turns the government's own historical documents into weapons against current fact-based assessment. This represents institutional laundering, exploiting official authority to legitimize outdated framings through technical manipulation of the information ecosystem.

The Reference Contamination Network

The OJP page amplification was just one component of a broader ecosystem manipulation. My analysis also revealed contamination across multiple reference sources that AI systems and search engines treat as authoritative:

Dictionary.com: Definitional Alignment

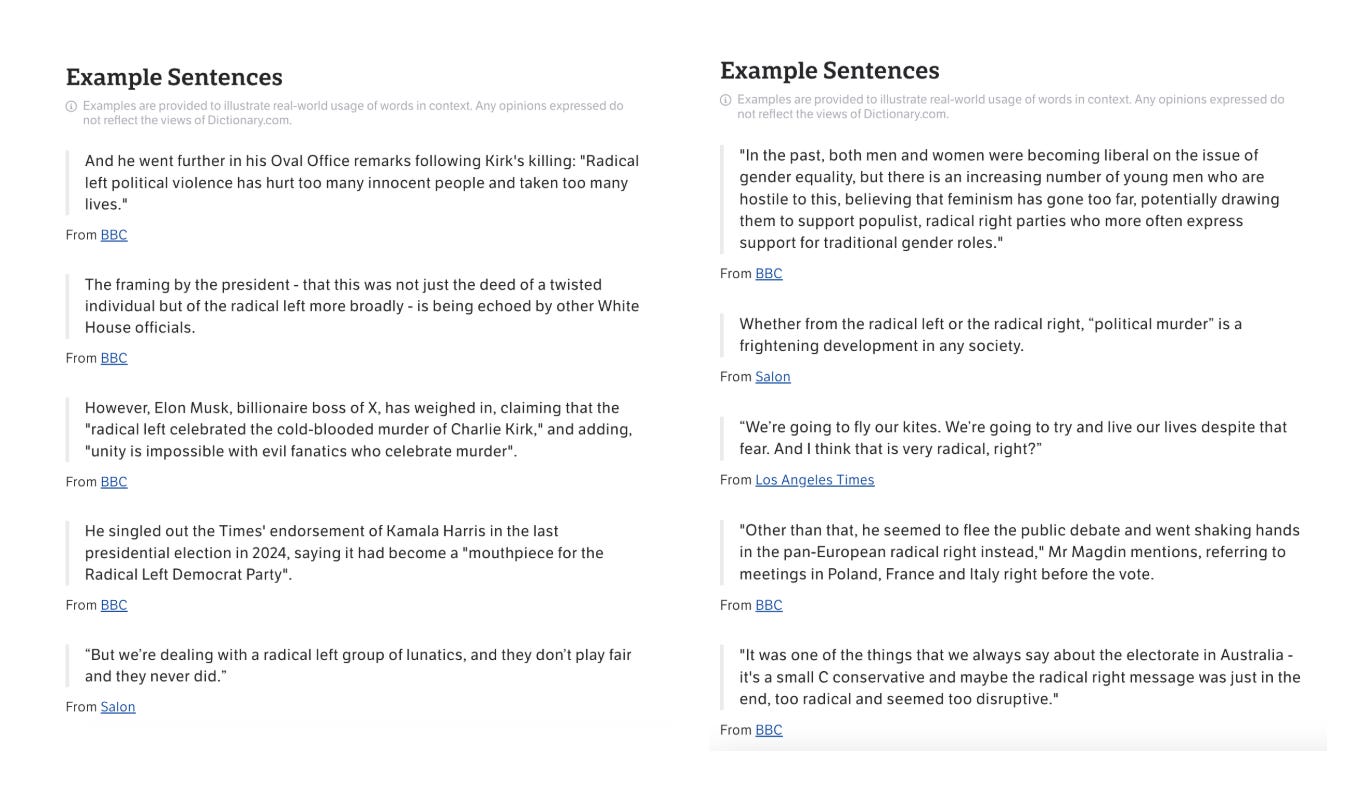

The Dictionary.com entry for "radical left" doesn't just provide a definition; it incorporates live partisan media quotes, including Trump's rhetoric about the "Radical Left Democrat Party" and Fox News claims linking the "radical left" to violence.

Compare this to the "radical right" entry, which offers a clinical description of fascism and supremacist movements without embedded partisan attacks. This asymmetry means that when AI systems or ordinary people look up these terms, they encounter political framing inside a reference source they trust to be neutral.

This creates a particularly insidious feedback loop. Political actors deploy inflammatory language, media outlets amplify and repeat these narratives, then Dictionary.com embeds this repetition directly into its definitions as illustrative examples. AI systems, treating dictionary sources as authoritative, reference these contaminated definitions as evidence of legitimate usage. Web crawlers then index and amplify the partisan sources embedded within dictionary content, making them more likely to surface in search results and AI responses. The effect is narrative and source reinforcement through seeming objectivity. What started as partisan rhetoric gets laundered through a reference source, then cited by AI systems as proof of the narrative's validity. Platform, media, and political actors create a self-reinforcing cycle in which partisan framing becomes embedded in the foundational language resources that shape public understanding.

Wikipedia: The AI Training Ground

Wikipedia's role in this manipulation ecosystem deserves special attention. My analysis of AI training datasets revealed Wikipedia as the dominant source for "radical left" queries, averaging 4.8 citations per response, more than any other reference source. As likely the single most influential source, Wikipedia frames terms like "radical left," "violent liberals," or "left-wing terrorism" in ways that become foundational because of its ubiquity and cross-linking. This makes Wikipedia's editorial dynamics extraordinarily influential in shaping both immediate public understanding and long-term AI knowledge.

The structural bias runs deep across multiple pages. "Far-left politics", the most referenced Wikipedia page for "radical left" queries, devotes entire sections to terrorism and militant violence. The "Radical left" disambiguation page compounds this by linking directly to Antifa entries, while "Left-libertarianism" pages connect academic political theory to "militant antifascism," creating multiple pathways between "radical left" searches and violent associations.

Real-Time Narrative Capture

The Charlie Kirk shooting revealed how Wikipedia becomes a vehicle for instant partisan encoding of breaking news events. Within hours, three near-duplicate entries appeared simultaneously: "Shooting of Charlie Kirk," "Killing of Charlie Kirk," and "Assassination of Charlie Kirk."

Reaction Over Facts

Wikipedia's most dangerous feature is its speed in encoding partisan narratives into apparently neutral fact. Within hours, entries tilted dramatically toward reaction and partisanship, giving disproportionate weight to conservative voices before any investigative confirmation.

Early Wikipedia coverage was flooded with edits emphasizing Kirk's political identity, extensive documentation of Republican leaders' reactions while Democratic responses remained underdeveloped, and speculative language about motive despite minimal official confirmation. Republican voices were disproportionately cited, often amplifying unverified "radical left" framing, while law enforcement information and factual timelines remained sparse. The tone reflected media headlines more than encyclopedic analysis, but Wikipedia's authority made these rushed judgments seem like settled fact.

The Information Asymmetry

Within 24 hours, Wikipedia's visibility in Google and AI answers meant this reaction-heavy framing reached searchers before neutral sources could respond. Anyone searching encountered Wikipedia's partisan framing first, creating information asymmetry and a false consensus around conservative interpretations before the facts emerged.

Since AI systems treat Wikipedia as authoritative training data, this real-time partisan capture becomes embedded in algorithmic knowledge. Wikipedia's speed advantage and the trust it commands in crisis moments make it a dominant voice in both public understanding and algorithmic memory, risking the encoding of partisan reactions as neutral knowledge before the facts can catch up.

The AI Training Feedback Loop

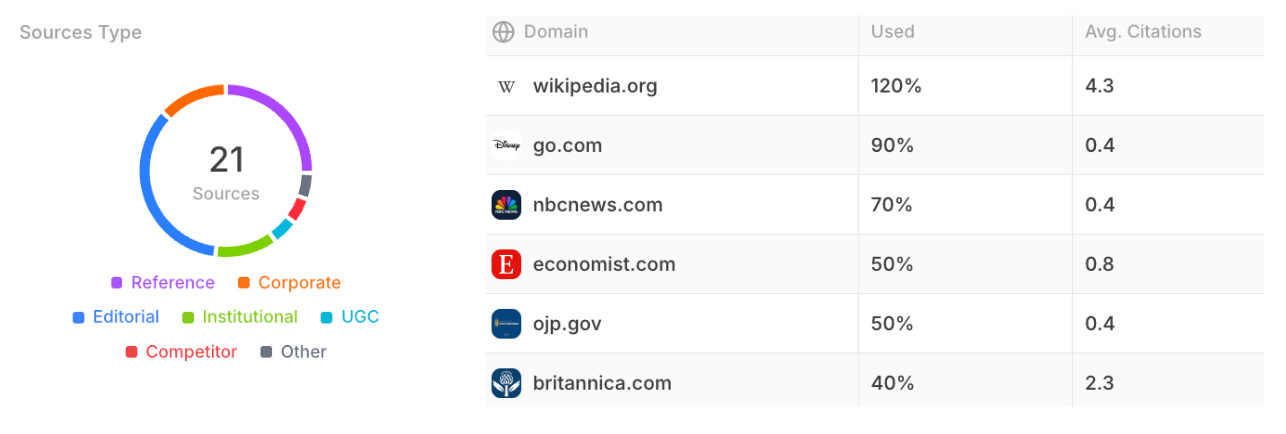

Analysis of source datasets for "radical left" and related queries revealed how systematically the contamination spreads across the knowledge ecosystem that trains AI systems:

Wikipedia dominated as the foundational source, establishing narrative frameworks around "radical left" political violence. The manipulated OJP page appeared as a top institutional source, lending government credibility to the notion of "radical left" violence as a genuine threat category. Dictionary.com provided contaminated definitions mixing neutral language with partisan attacks.

Mainstream media functioned as a conduit that transformed political messaging into training data, legitimizing partisan framing through the credibility of news coverage. Reddit discussions averaged 2.0 citations per response, feeding conversational patterns in which "radical left" functions as a casual slur rather than a political descriptor. This teaches AI systems both definitional and conversational bias.
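Per-source figures like these are averages over a set of AI answers. One plausible way to compute them, as a minimal sketch assuming each response's cited URLs have already been extracted into a list, and assuming the average is taken over all responses (including those that never cite a given source). The function names and sample URLs are hypothetical.

```python
from collections import Counter
from urllib.parse import urlparse

def source_domain(url):
    """Naive grouping: keep the last two host labels (en.wikipedia.org -> wikipedia.org).

    This is wrong for suffixes like .co.uk; a public-suffix list would be needed there.
    """
    host = urlparse(url).hostname or ""
    return ".".join(host.lower().split(".")[-2:])

def avg_citations_per_response(responses):
    """responses: one list of cited URLs per AI answer.

    Averages over *all* responses, including ones that never cite a given domain.
    """
    if not responses:
        return {}
    totals = Counter(source_domain(u) for cited in responses for u in cited)
    return {domain: n / len(responses) for domain, n in totals.items()}

responses = [
    ["https://en.wikipedia.org/wiki/Far-left_politics",
     "https://en.wikipedia.org/wiki/Antifa",
     "https://www.reddit.com/r/example"],
    ["https://en.wikipedia.org/wiki/Far-left_politics"],
]
print(avg_citations_per_response(responses))  # {'wikipedia.org': 1.5, 'reddit.com': 0.5}
```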

The result is a self-reinforcing feedback loop where manipulated sources feed AI training, which shapes search results, which influences public perception, which justifies more manipulation.

The Perfect Timing

The manipulation's timing becomes obvious against the Charlie Kirk aftermath. When political leaders immediately deployed "radical left" language, they triggered predictable searches for context about political violence. Americans searching for information encountered a systematically biased information environment:

An artificially amplified government page treating "radical left" terrorism as equivalent to right-wing violence. Dictionary definitions loaded with partisan attack language. Wikipedia emphasizing left-wing militancy while downplaying right-wing threats. AI responses trained on this contaminated reference ecosystem.

Meanwhile, more recent OJP resources explicitly identifying white supremacy as the leading domestic terror threat have been removed. Together, selective amplification and erasure reshape the available evidence base, tilting search narratives toward "radical left" violence.

The Memory Wars

This manipulation is about more than influencing real-time public opinion. It's also about controlling algorithmic memory: determining what information systems "remember" and surface when people seek answers.

Traditional propaganda meant controlling media outlets or creating new content. This model hijacks existing authoritative sources and manipulates the technical infrastructure determining what gets seen and believed. The OJP page shows how fresh links to a 1990 article with outdated "both sides" framing can override decades of subsequent research and data.

What Comes Next

We’ve seen these exact strategies before. These techniques will expand beyond terrorism into any contested area where historical government documents contain politically useful language. Gender pay gap data. Climate change research from the 1990s. Economic analyses from previous decades. Any archival content becomes a resource for contemporary battles.

When official government sources become tools for political manipulation, the consequences ripple into daily life. Decisions about work, health, finances, and safety end up resting on information designed to serve narrow agendas rather than reflect reality. Once spin replaces facts in authoritative places, it becomes nearly impossible to know which sources to trust. This breeds confusion and makes people more vulnerable to mistakes and avoidable harm.

Citizens need to recognize that search results and AI responses do not inherently reflect fact; they often reflect ongoing technical manipulation campaigns, not just the information available.

The Bigger Picture

The OJP page resurrection demonstrates how archival weaponization, while just one tactic within a broader manipulation infrastructure, has become a cornerstone strategy for controlling algorithmic systems. The strategies documented here have spread, and will continue to spread, to other topics where historical government documents can be exploited.

Charlie Kirk's shooting provided the crisis moment when invisible preparation paid dividends for information manipulators. But documentation creates accountability. Making these tactics visible reduces their effectiveness. Understanding how this infrastructure operates is the first step toward building resilience against manipulation.

The stakes are the future of evidence-based discourse in American democracy. That's worth fighting for, even when the fight seems impossible.

This analysis is based on technical research into AI training datasets, amplification and backlink patterns, search rankings, and content analysis across multiple platforms. All data sources and methodologies are available for independent verification.

If this resonates with you, I'd love to hear from you. Drop a comment or reach out directly.

If you think others need to see this, please share it. And subscribe if you want more honest conversations.